Problem Solving

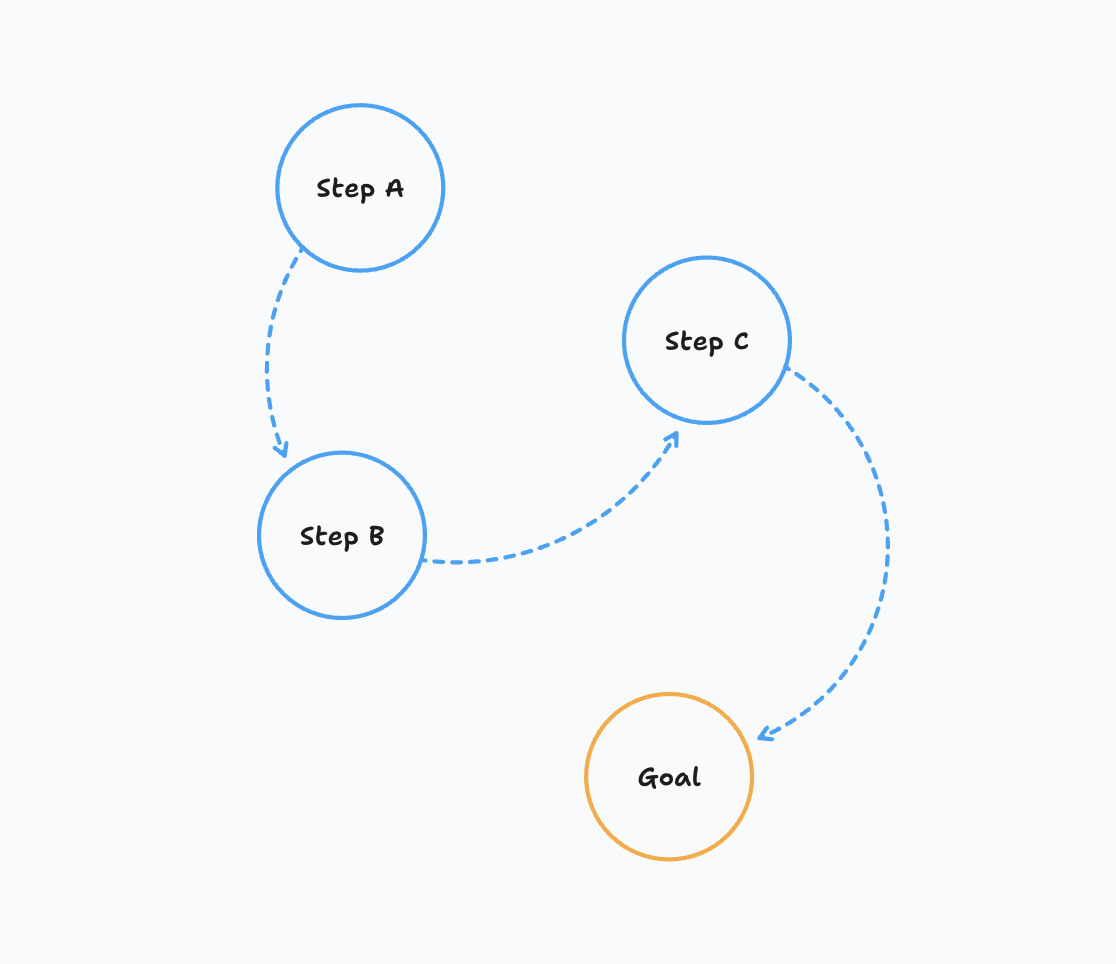

Problem solving means carrying out a multistep sequence of actions to achieve a goal.

The agent needs to plan ahead, make decisions based on the current state of the world, and adjust the plan as new observations arrive.

Action Space

At any moment the agent can choose from a huge number of actions, and the problem is to decide which ones to execute.

An even bigger problem is that the solution is usually a combination of actions, and the number of possible combinations grows exponentially with the length of the sequence. This is the combinatorial explosion problem.

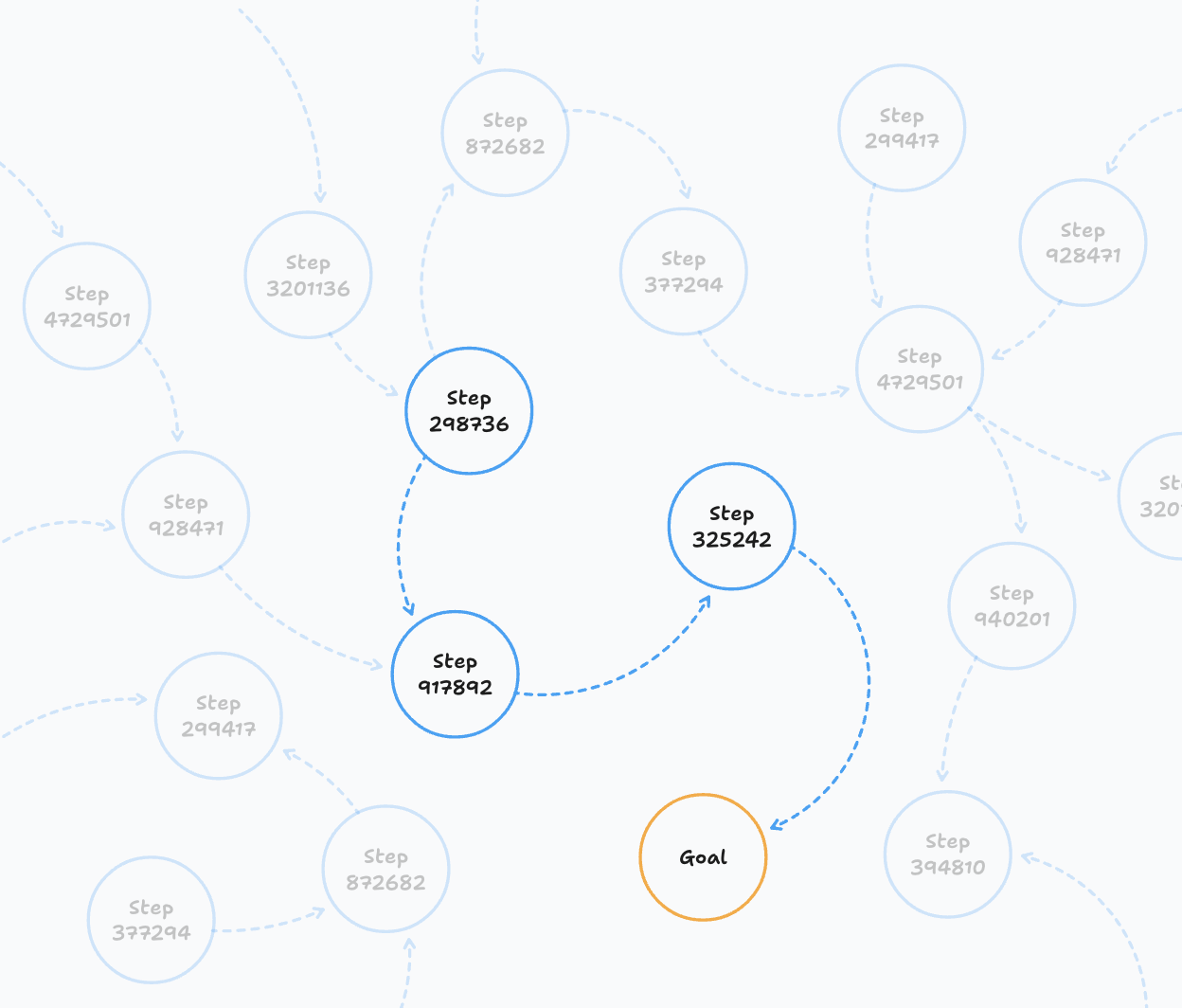
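To get a feel for how fast the action space grows, here is a minimal sketch. It assumes a fixed number of available actions at every step and counts ordered sequences of a given length; the function name `count_sequences` and the figure of 10 actions are made up for illustration.

```python
def count_sequences(num_actions: int, length: int) -> int:
    """Number of distinct ordered action sequences of exactly `length` steps,
    assuming the same `num_actions` choices are available at every step."""
    return num_actions ** length


# Even with only 10 possible actions, the count grows by a factor of 10 per step.
for length in (1, 5, 10, 20):
    print(f"{length:>2} steps: {count_sequences(10, length)} sequences")
```

A real agent has far more than 10 actions to choose from, so exhaustively searching all sequences quickly becomes impractical.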

Related Actions

One way to reduce the action space is to notice that many action sequences are used over and over again. If we are doing the first action of such a sequence, there is a good chance that we will need to do the second action next.

It's unusual to go brush your teeth while making a salad. We can greatly narrow the search space by considering only the actions that are linked to the current action or state.

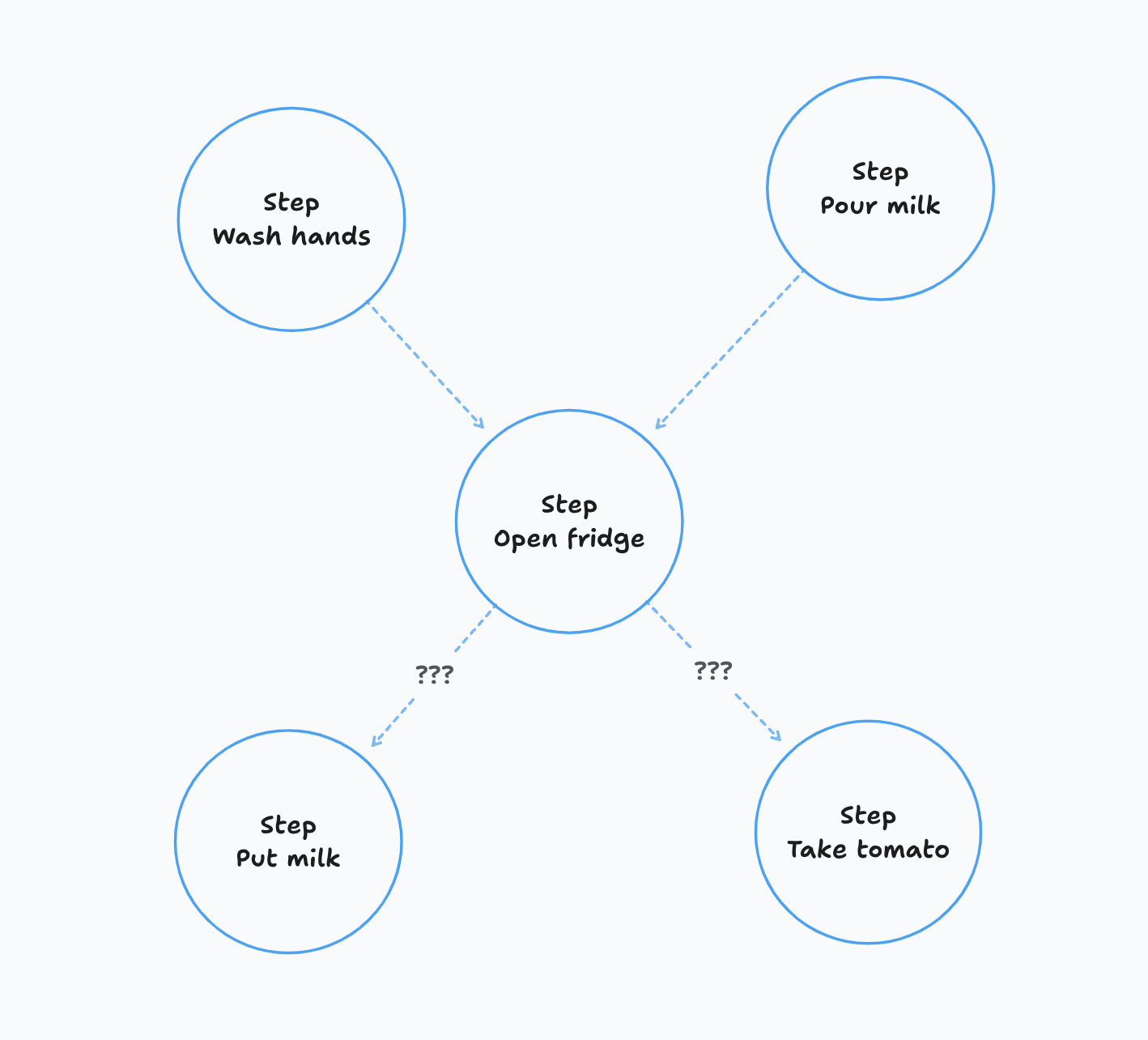
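The idea of linked actions can be sketched as a simple lookup table. This is only an illustration: the `LINKS` table, its contents, and the helper `candidate_actions` are hypothetical and not part of any described implementation.

```python
# Hypothetical link table: each action maps to the actions that commonly follow it.
LINKS = {
    "take tomato": ["wash tomato", "cut tomato"],
    "wash tomato": ["cut tomato"],
    "cut tomato": ["add to salad"],
    "pick up toothbrush": ["brush teeth"],
}

# Every action the agent knows about, linked or not.
ALL_ACTIONS = sorted(set(LINKS) | {a for nxt in LINKS.values() for a in nxt})


def candidate_actions(current: str) -> list[str]:
    """Return only the actions linked to the current action."""
    return LINKS.get(current, [])


print(len(ALL_ACTIONS), "actions in total")
print("after 'take tomato':", candidate_actions("take tomato"))
```

Instead of considering every known action, the agent only looks at the few that are linked to what it is doing right now, so "brush teeth" never comes up while it is handling the tomato.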

Sequence Crossing

It is common for the same action to appear in different sequences. For example, we might open the fridge to put something in it or to take something out. The likelihood of the next action then depends on what we were doing before.

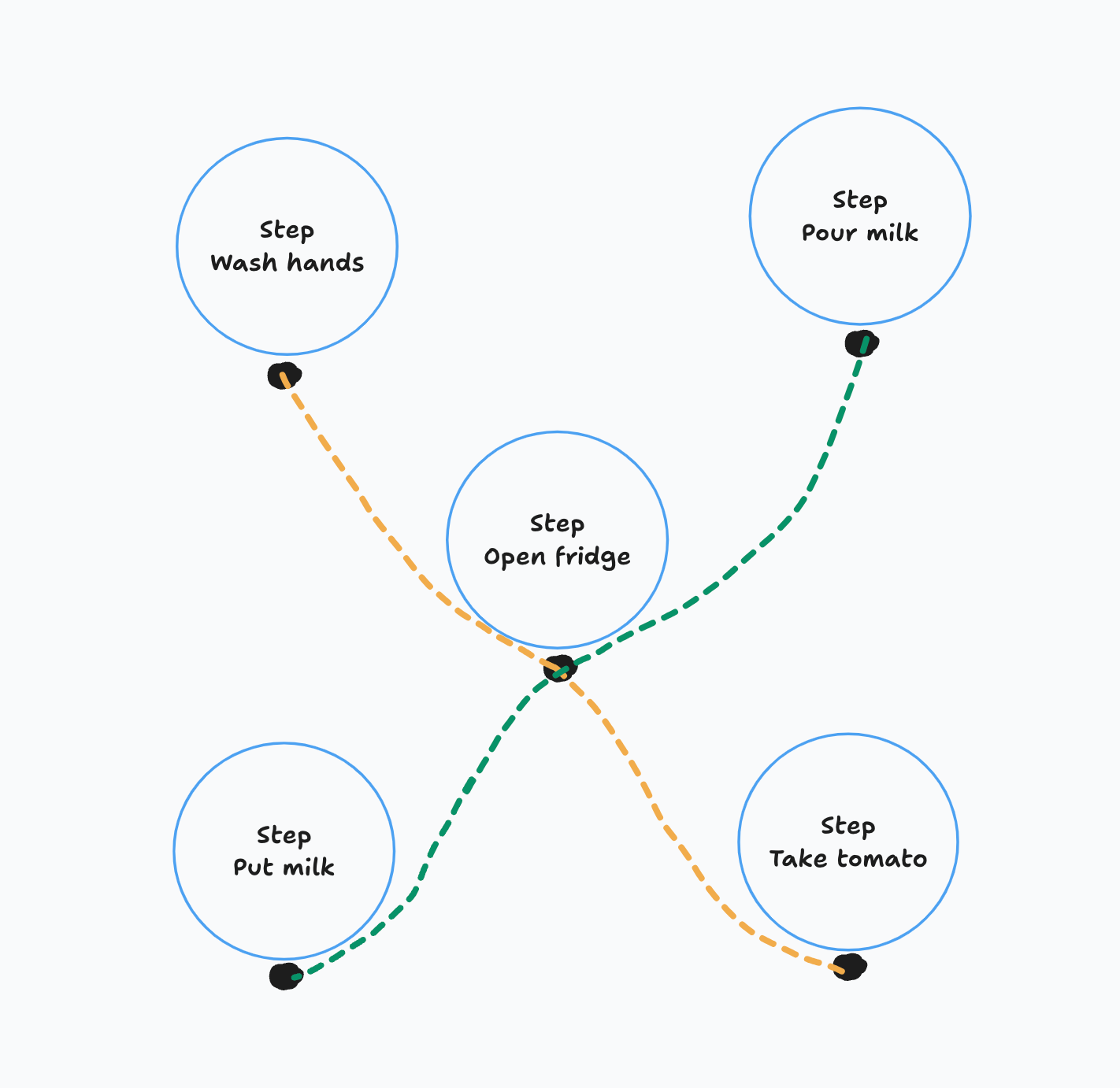

Action Paths

So instead of assigning energy to individual links between actions, we can add paths that record action sequences regularly executed together.

For example, here we have two paths: "Wash hands, Open fridge, Take tomato" and "Pour milk, Open fridge, Put milk". If we have just poured milk, then after opening the fridge we will put the milk into it.
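The two example paths can be used to pick the next action from recent history. The sketch below is one possible way to do it, assuming that the path matching the longest run of recent actions wins; the helper names `match_length` and `next_action` are invented for this example.

```python
# The two paths from the example above.
PATHS = [
    ["Wash hands", "Open fridge", "Take tomato"],
    ["Pour milk", "Open fridge", "Put milk"],
]


def match_length(path, end, history):
    """Count how many trailing actions of `history` match `path`
    when the last action is aligned with path[end]."""
    n = 0
    while n <= end and n < len(history) and path[end - n] == history[-1 - n]:
        n += 1
    return n


def next_action(history):
    """Return the successor from the path that best matches recent history,
    or None if no path contains the last action."""
    best_len, best_next = 0, None
    for path in PATHS:
        for end in range(len(path) - 1):  # the last step has no successor
            n = match_length(path, end, history)
            if n > best_len:
                best_len, best_next = n, path[end + 1]
    return best_next


print(next_action(["Pour milk", "Open fridge"]))
print(next_action(["Wash hands", "Open fridge"]))
```

"Open fridge" appears in both paths, but the action before it disambiguates which path we are on. With only "Open fridge" in the history, both paths match equally well and this sketch simply keeps the first one.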

Reasoning

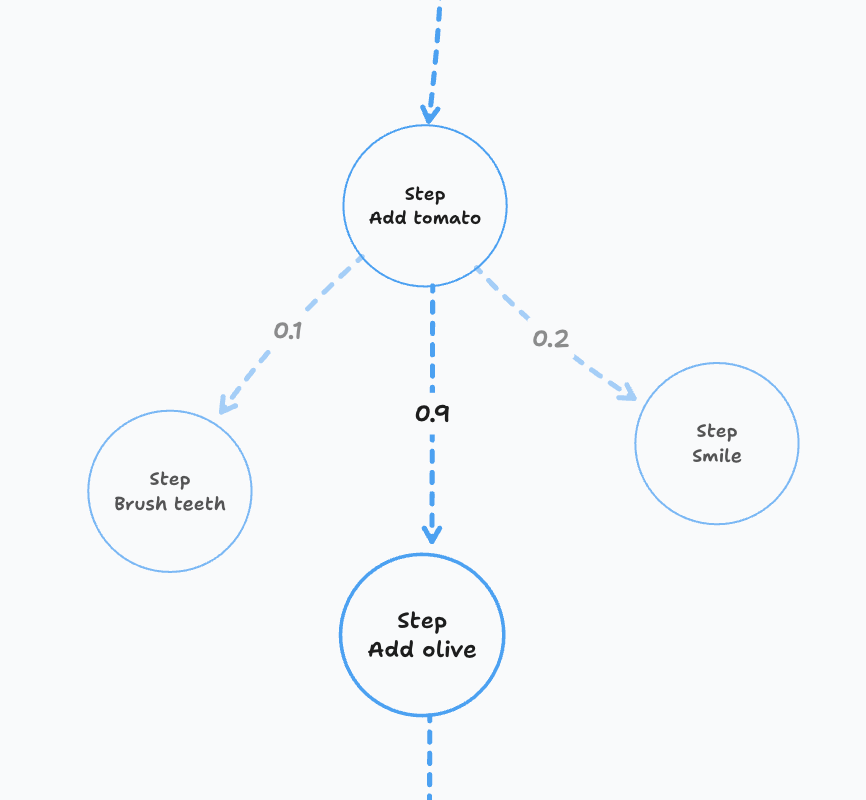

The agent can't always choose actions based only on what worked previously.

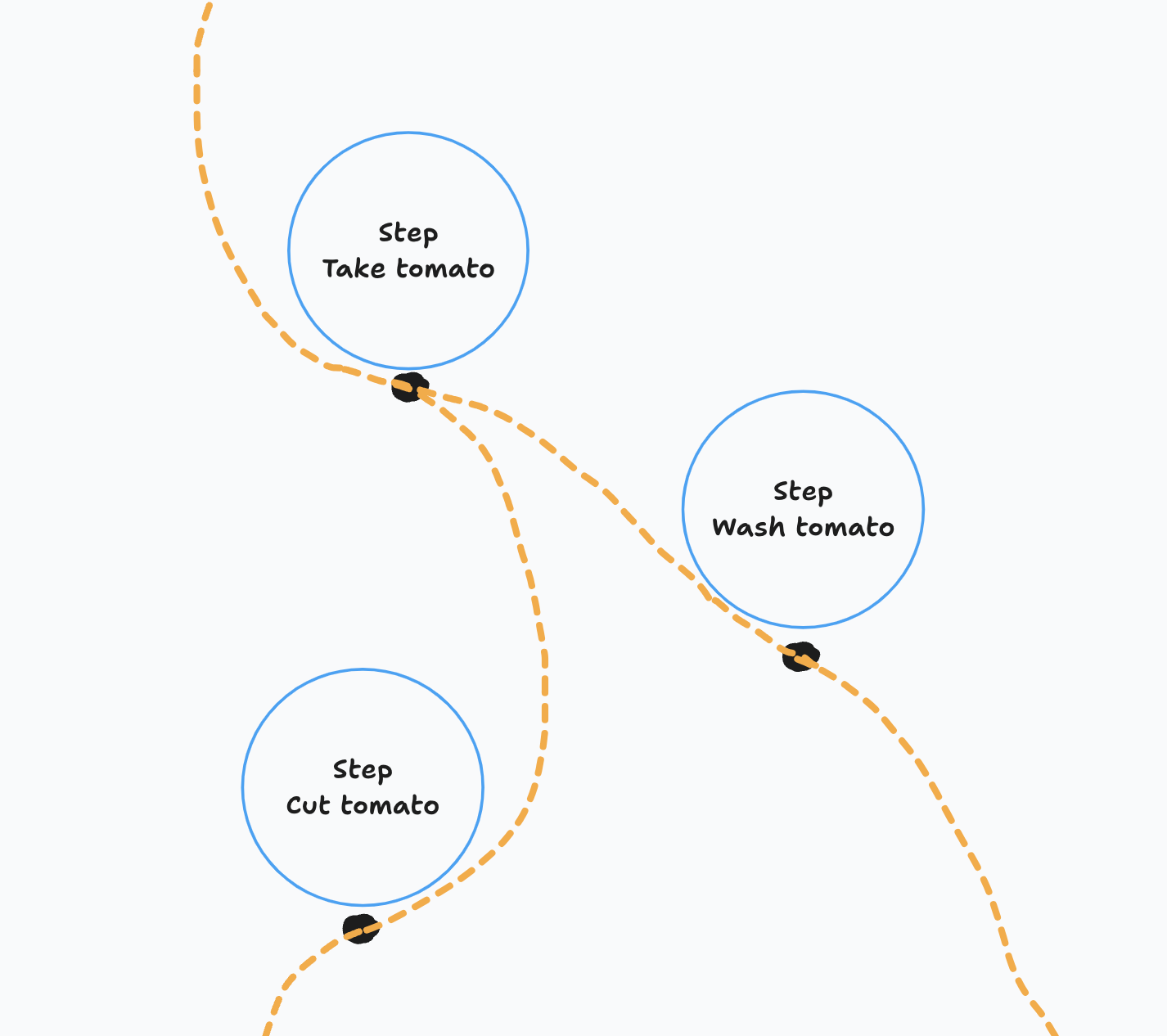

Often actions depend on the current state of the world. This can be represented with branching paths.

For example, in this image, after taking the tomato the agent either cuts it or washes it first, depending on whether the tomato is clean or not.

It is not clear, however, how branching paths could be learned, so we use overlapping paths instead.

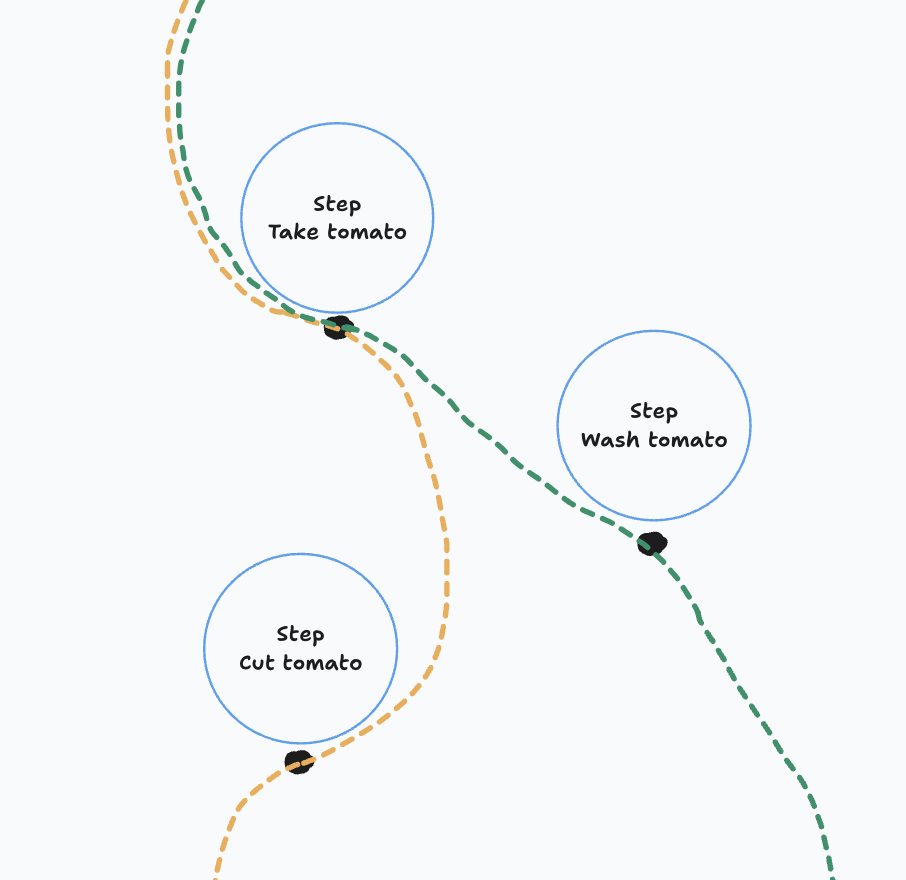

Overlapping Paths

Branching paths would introduce a lot of complexity into the learning process. Instead, we keep overlapping paths that are active at the same time, and we may jump from one path to another if the other is more relevant to the current context.

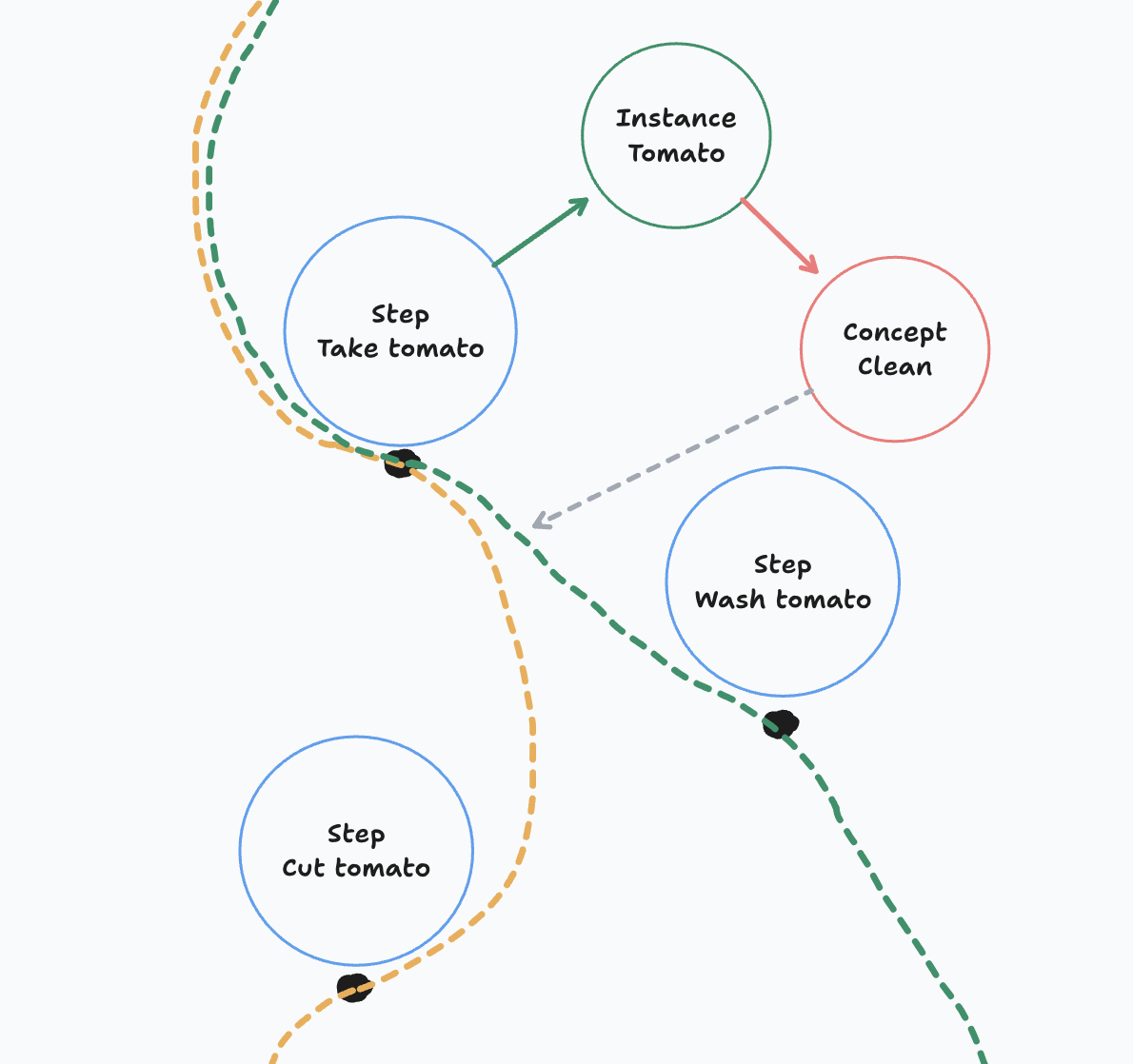
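A minimal sketch of overlapping paths, assuming two plain linear paths that share some actions. The path names ("quick salad", "clean first") and the helper functions are hypothetical. Every path that contains the current action is treated as active, and each one proposes its own next step.

```python
# Two linear paths that overlap on "Take tomato" and "Cut tomato".
PATHS = {
    "quick salad": ["Take tomato", "Cut tomato"],
    "clean first": ["Take tomato", "Wash tomato", "Cut tomato"],
}


def active_cursors(action):
    """Every (path name, index) where `action` occurs; all are active at once."""
    return [
        (name, i)
        for name, steps in PATHS.items()
        for i, step in enumerate(steps)
        if step == action
    ]


def proposals(action):
    """Next step proposed by each active path that still has one."""
    return {
        name: PATHS[name][i + 1]
        for name, i in active_cursors(action)
        if i + 1 < len(PATHS[name])
    }


print(proposals("Take tomato"))
print(proposals("Wash tomato"))
```

No explicit branch node is stored anywhere. The choice between cutting and washing appears only because two ordinary paths happen to be active after "Take tomato". Which proposal wins is left to the context.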

World State

World state in this context refers to the agent's modeled version of the world. For example, when taking the tomato, the agent will have to represent this tomato in its world model.

Then, based on the properties of the tomato, it will judge the tomato to be either clean or dirty. The concept of Dirty is associated with the branch of washing the tomato, adding more weight to that branch.

This is represented schematically in the image.
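One way to picture how a concept adds weight to a branch is a table of associations between concepts and actions. This is a rough sketch under assumed names and numbers: `CONCEPT_WEIGHTS`, the weight values, and `choose` are all hypothetical.

```python
# Hypothetical associations between world-model concepts and candidate actions.
CONCEPT_WEIGHTS = {
    "dirty": {"Wash tomato": 1.0},
    "clean": {"Cut tomato": 1.0},
}


def choose(candidates, world_state):
    """Pick the candidate with the highest total weight contributed by the
    concepts currently present in the world model. Ties go to the first candidate."""
    scores = {action: 0.0 for action in candidates}
    for concept in world_state:
        for action, weight in CONCEPT_WEIGHTS.get(concept, {}).items():
            if action in scores:
                scores[action] += weight
    return max(scores, key=scores.get)


candidates = ["Cut tomato", "Wash tomato"]
print(choose(candidates, {"dirty"}))
print(choose(candidates, {"clean"}))
```

The candidates come from the paths that are currently active, and the world state only tips the balance between them.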

Learning

The agent observes other agents and learns from them. A sequence of actions that another agent takes creates a new path in the agent's memory.
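Learning from observation could be sketched as follows. The sketch assumes that each observed sequence is stored as one path and that an already known sequence is not stored twice; the class `PathMemory` and its method names are hypothetical.

```python
class PathMemory:
    """Stores action paths learned by watching other agents."""

    def __init__(self):
        self.paths = []  # each path is a tuple of action names

    def observe(self, actions):
        """Record an observed action sequence as a new path.
        Returns True if a new path was created."""
        path = tuple(actions)
        # A single action is not a sequence, and known paths are not duplicated.
        if len(path) < 2 or path in self.paths:
            return False
        self.paths.append(path)
        return True


memory = PathMemory()
memory.observe(["Pour milk", "Open fridge", "Put milk"])
memory.observe(["Pour milk", "Open fridge", "Put milk"])  # seen again, no new path
memory.observe(["Wash hands", "Open fridge", "Take tomato"])
print(len(memory.paths), "paths learned")
```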