Pain Driven Learning

Living beings learned what is good and what is bad through evolution.

Beings that learned to react to pain survived longer.

Beings that associated bad things with future pain could avoid it.

We give our agent a pain signal so that it can learn to predict pain and react to it.

Event Causality

If the agent notices that some action is causing pain, then it should learn to associate the action with the pain.

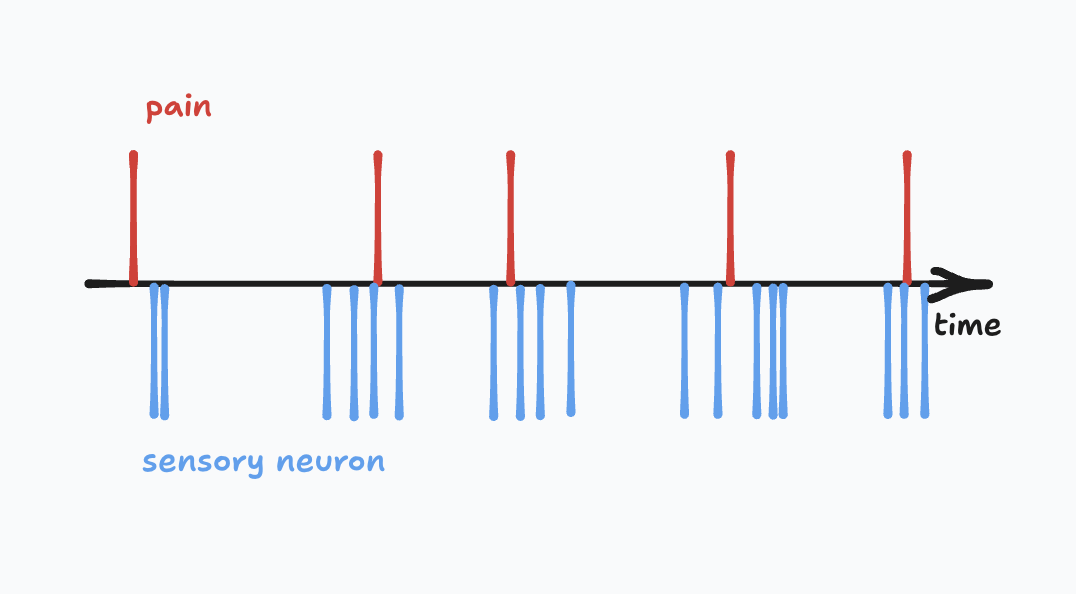

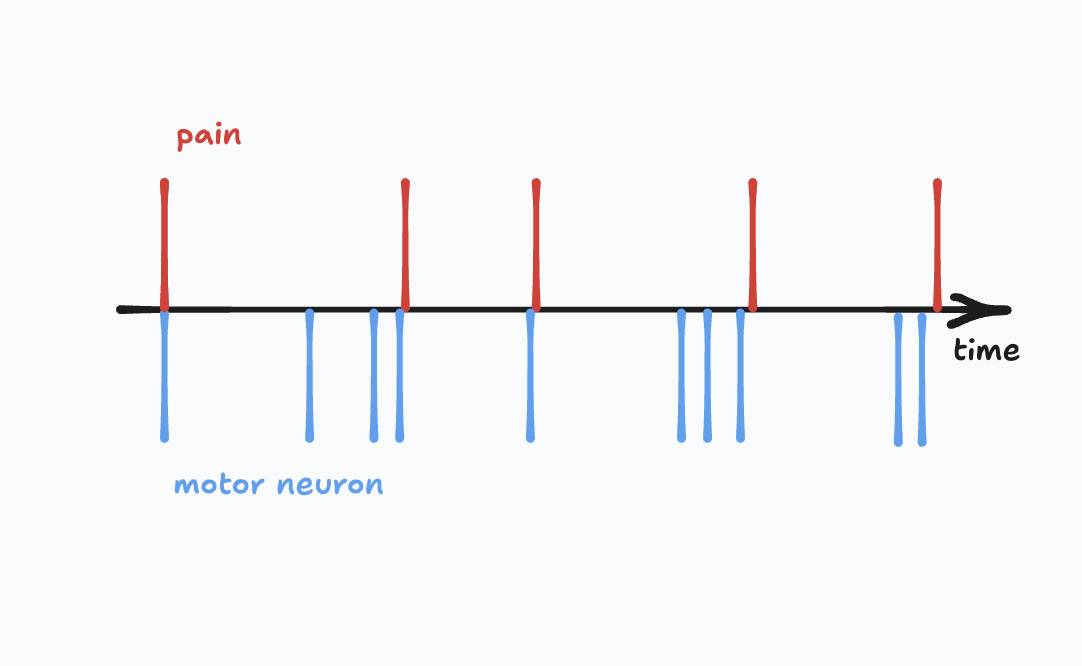

To do that, the agent collects statistics about which neurons correlate with pain.
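As a minimal sketch of what such statistics could look like, the snippet below counts, for each neuron, how often it fired and how often a firing was followed by pain within a short window. The function name `pain_follow_counts`, the `window` parameter, and the neuron names are illustrative assumptions, not part of the design described here.

```python
def pain_follow_counts(firings, pain, window=3):
    """For each neuron, return (times fired, times a firing was followed by pain).

    `firings` maps a neuron name to a list of 0/1 values, one per time step.
    `pain` is a list of 0/1 values on the same time axis.
    A firing at step t counts as followed by pain if pain occurs
    in steps t+1 .. t+window (an assumed, tunable horizon).
    """
    stats = {}
    for name, spikes in firings.items():
        fired = 0
        followed = 0
        for t, spike in enumerate(spikes):
            if not spike:
                continue
            fired += 1
            if any(pain[t + 1 : t + 1 + window]):
                followed += 1
        stats[name] = (fired, followed)
    return stats


# Hypothetical recordings: one action that precedes pain, one unrelated signal.
firings = {
    "touch_fire": [0, 1, 0, 0, 0, 1, 0, 0, 0, 0],
    "blink":      [1, 0, 0, 1, 0, 0, 0, 1, 0, 0],
}
pain =            [0, 0, 1, 0, 0, 0, 1, 0, 0, 0]

stats = pain_follow_counts(firings, pain, window=2)
```

In this toy data every `touch_fire` firing is followed by pain, while only one of the three `blink` firings is.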

Random Signals

There will be a lot of random signals that the agent will need to learn to ignore.

The more frequent a random signal is, the more likely it is to co-occur with pain at some point purely by chance.

Our goal is to quantify how well a signal predicts pain and how much of the apparent association is random.

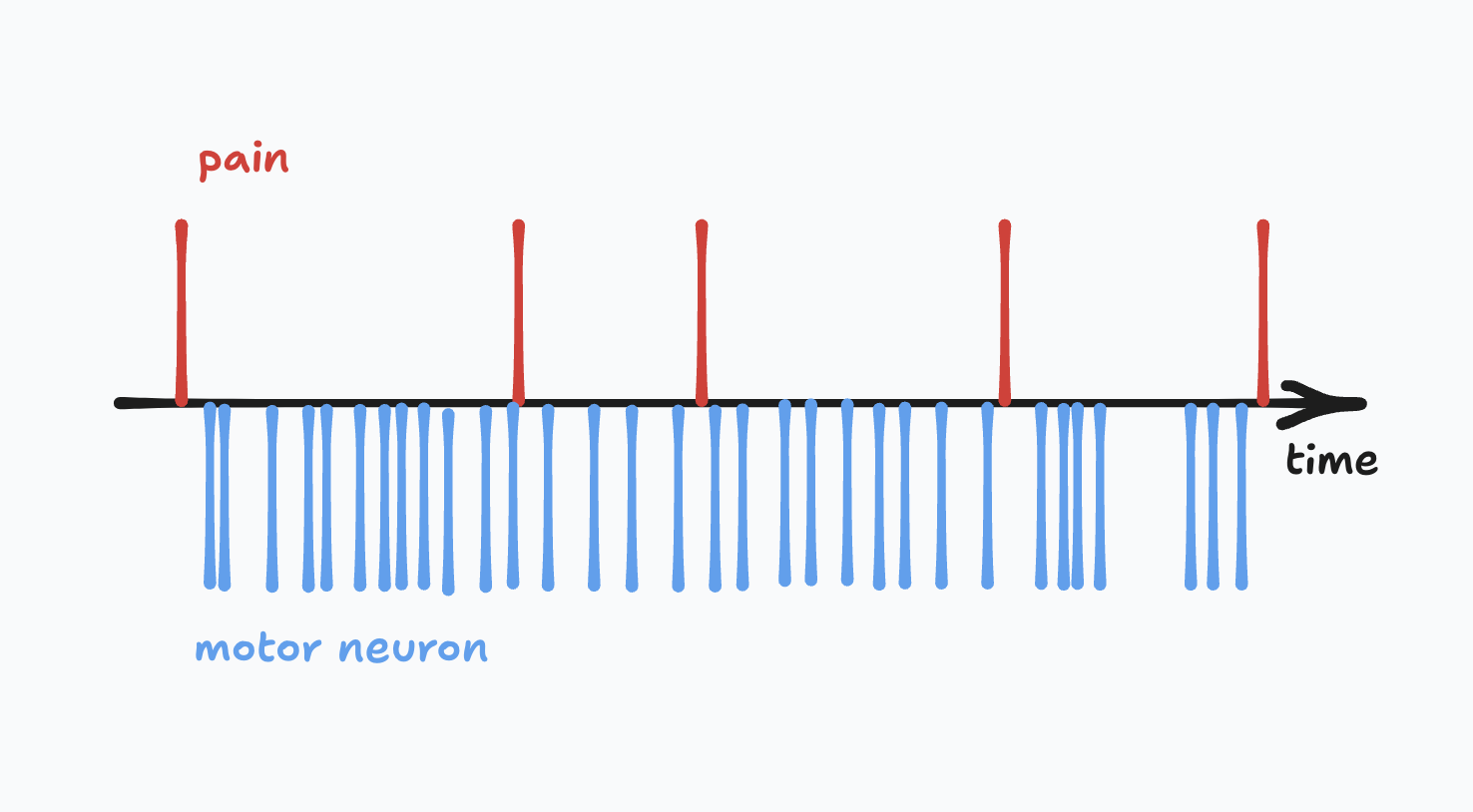

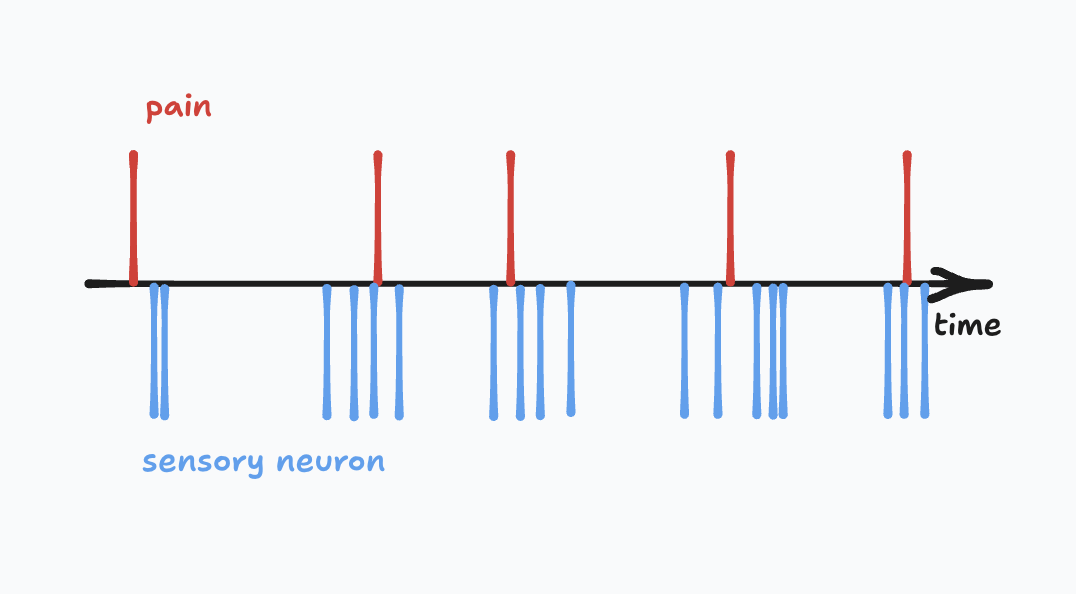
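One possible way to separate prediction from chance, sketched below, is to compare how often pain follows the signal with how often pain follows an arbitrary time step. The "excess over base rate" score, the `window` parameter, and all names are assumptions chosen for illustration; other measures would work as well.

```python
def followed_by_pain(pain, t, window):
    """True if pain occurs in steps t+1 .. t+window."""
    return any(pain[t + 1 : t + 1 + window])


def excess_over_chance(signal, pain, window=2):
    """Return (hit_rate, base_rate, excess) for a 0/1 signal.

    hit_rate:  fraction of signal firings followed by pain.
    base_rate: fraction of all time steps followed by pain,
               i.e. what a signal firing at random would achieve.
    excess:    hit_rate - base_rate; near zero for a random signal.
    """
    n = len(pain)
    base_rate = sum(followed_by_pain(pain, t, window) for t in range(n)) / n
    fired = [t for t in range(n) if signal[t]]
    if not fired:
        return 0.0, base_rate, 0.0
    hits = sum(followed_by_pain(pain, t, window) for t in fired)
    hit_rate = hits / len(fired)
    return hit_rate, base_rate, hit_rate - base_rate


pain = [0, 0, 1, 0, 0, 0, 1, 0, 0, 0]
always_on = [1] * 10                        # very frequent, uninformative
causal = [0, 1, 0, 0, 0, 1, 0, 0, 0, 0]     # fires just before each pain

noise_score = excess_over_chance(always_on, pain)
causal_score = excess_over_chance(causal, pain)
```

The always-on signal is followed by pain four times, more often than the causal signal, yet its excess over the base rate is zero. The causal signal is followed by pain every time it fires, well above the base rate.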

Dense Pain Signals

The same problem occurs when pain fires more frequently than the neuron signals.

In the image, the signal is perfectly lined up with the pain but explains only a small part of it.

Such a signal is still very useful, because we can combine multiple signals with a custom logic network and try to explain more of the pain.
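The sketch below illustrates the idea with two measures: precision (how often the signal coincides with pain when it fires) and coverage (how much of the pain it explains). Combining two signals with a plain OR stands in for the logic network here; that choice, and all names, are assumptions made for the example.

```python
def precision_and_coverage(signal, pain):
    """Return (precision, coverage) of a 0/1 signal against a 0/1 pain trace.

    precision: fraction of signal firings that coincide with pain.
    coverage:  fraction of pain steps that coincide with a signal firing.
    """
    both = sum(1 for s, p in zip(signal, pain) if s and p)
    fired = sum(1 for s in signal if s)
    hurt = sum(1 for p in pain if p)
    precision = both / fired if fired else 0.0
    coverage = both / hurt if hurt else 0.0
    return precision, coverage


# Dense pain: it fires on 6 of 8 steps.
pain = [1, 1, 0, 1, 1, 0, 1, 1]
a =    [1, 0, 0, 0, 0, 0, 1, 0]   # aligned with pain, but sparse
b =    [0, 0, 0, 1, 1, 0, 0, 0]   # aligned with a different part of the pain

# Hypothetical combination: an OR gate over the two signals.
a_or_b = [x | y for x, y in zip(a, b)]

score_a = precision_and_coverage(a, pain)
score_b = precision_and_coverage(b, pain)
score_or = precision_and_coverage(a_or_b, pain)
```

Each signal alone has perfect precision but covers only a third of the pain. The OR of the two keeps perfect precision and covers two thirds.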

Correlation

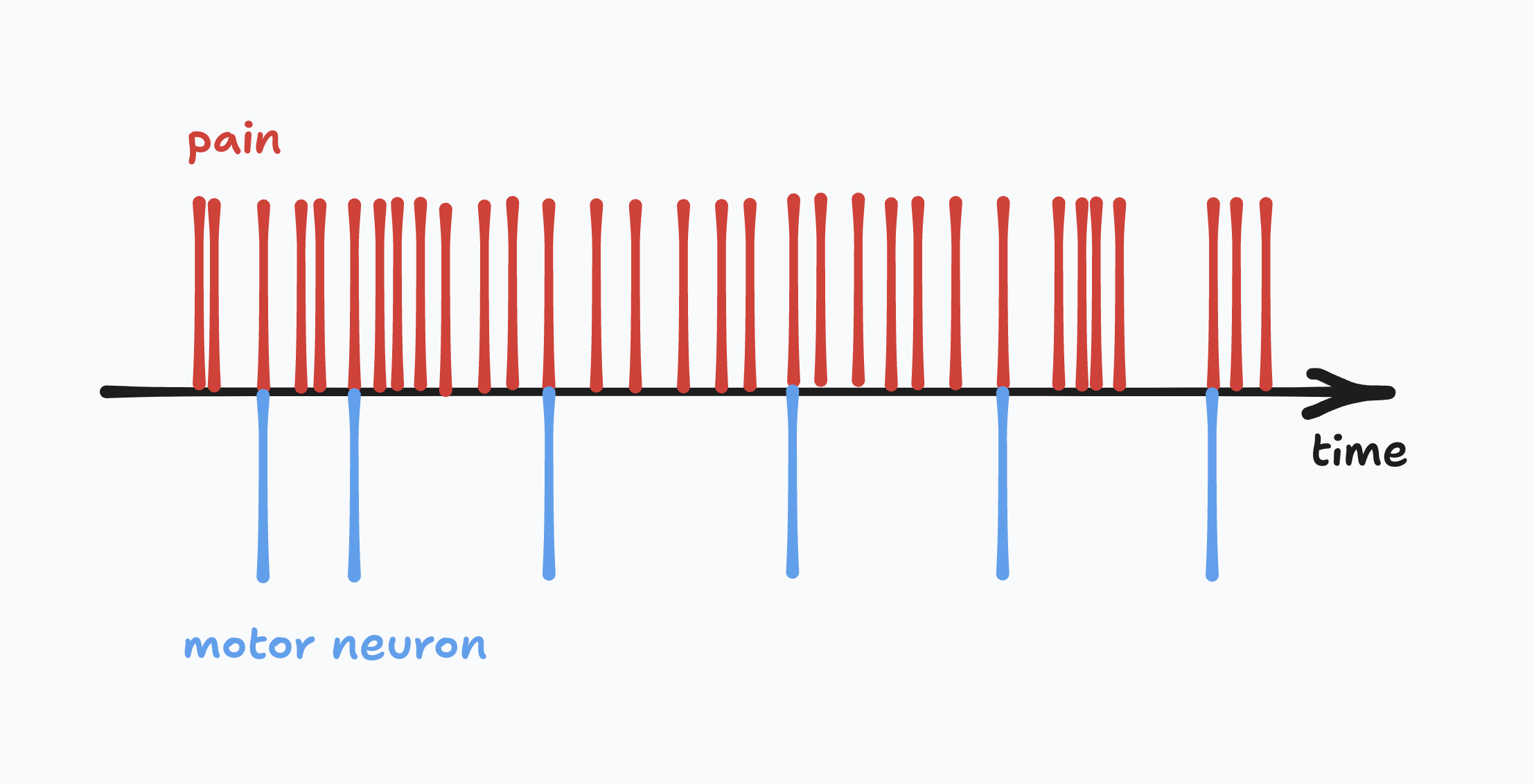

Apart from predicting which of our actions cause pain, we also need to learn which sensory inputs are associated with pain.

This allows the agent to anticipate pain and react to it.

To learn correlations, we need to learn what comes both before and after the pain (for motor neurons we were only interested in causality, that is, in what comes before).
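A simple way to look both before and after the pain is to count co-occurrences at several time lags, as in the sketch below. The lag convention, the `max_lag` parameter, and the sensor names are assumptions for illustration only.

```python
def lagged_cooccurrence(signal, pain, max_lag=2):
    """Count how often signal[t] and pain[t + lag] are both 1, per lag.

    lag > 0: the signal comes before the pain (it can anticipate pain).
    lag < 0: the signal comes after the pain (it reflects the aftermath).
    lag = 0: the signal and the pain coincide.
    """
    n = len(pain)
    counts = {}
    for lag in range(-max_lag, max_lag + 1):
        total = 0
        for t in range(n):
            u = t + lag
            if 0 <= u < n and signal[t] and pain[u]:
                total += 1
        counts[lag] = total
    return counts


pain =        [0, 0, 1, 0, 0, 0, 1, 0, 0, 0]
smell_smoke = [0, 1, 0, 0, 0, 1, 0, 0, 0, 0]   # hypothetical input, one step before pain
see_wound =   [0, 0, 0, 1, 0, 0, 0, 1, 0, 0]   # hypothetical input, one step after pain

before = lagged_cooccurrence(smell_smoke, pain)
after = lagged_cooccurrence(see_wound, pain)
```

Here `smell_smoke` peaks at lag +1 and `see_wound` at lag -1, so the first is useful for anticipating pain while the second only shows up afterwards.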

Logic Input Information

A logic AND gate takes two inputs. If the two inputs have a lot of mutual information, then this logic gate will be useless, since the output will be very close to the inputs.

The same holds for other logic gates. So the learning algorithm should destroy neurons whose inputs share a lot of mutual information.

Another option is to avoid creating such neurons in the first place, but that would require knowing the pairwise mutual information for the whole network (or its partitions). In contrast, calculating the mutual information between the inputs of each existing neuron takes time linear in the number of neurons.
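For two binary input streams, the mutual information can be estimated directly from observed firing counts. The sketch below uses the standard plug-in estimate; the function name and the toy data are assumptions, and a real implementation would probably update the counts incrementally.

```python
import math
from collections import Counter


def mutual_information(x, y):
    """Plug-in estimate of the mutual information (in bits) of two sequences."""
    n = len(x)
    joint = Counter(zip(x, y))
    px = Counter(x)
    py = Counter(y)
    mi = 0.0
    for (a, b), count in joint.items():
        p_ab = count / n
        # p(a, b) / (p(a) * p(b)) written in terms of raw counts
        ratio = count * n / (px[a] * py[b])
        mi += p_ab * math.log2(ratio)
    return mi


x = [0, 0, 1, 1, 0, 1, 0, 1]
same_as_x = list(x)                      # fully redundant second input
u = [0, 0, 1, 1, 0, 0, 1, 1]
v = [0, 1, 0, 1, 0, 1, 0, 1]             # independent of u in this sample

mi_redundant = mutual_information(x, same_as_x)
mi_independent = mutual_information(u, v)

# An AND gate over the redundant pair just reproduces its input.
and_redundant = [a & b for a, b in zip(x, same_as_x)]
```

The redundant pair shares one full bit and the AND output equals `x`, so a neuron built on it adds nothing. The independent pair shares zero bits, which makes it a candidate worth keeping.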